Examples from a development project (2015/2016) at the National Museum of Art, Architecture and Design in Oslo, section for Digital Collection Management.

At the National Museum in Oslo, we have recently completed a project in which we trained computer algorithms on principal component analysis of our art collection, hence the project name: Principal Components.

One of our core questions in Principal Components was: Can we teach algorithms to produce reusable classifications? To this end, one of our main objectives was to train an algorithm on Iconclass, a significant international authority list of iconographic art history terms. Regrettably, we lack a full Norwegian translation, and we have therefore not yet incorporated Iconclass into the museum's cataloguing routine. Parts of our catalogue records also lack overarching concepts altogether; such descriptive concepts served less of a purpose in older, analogue catalogues. As catalogue information has shifted from being primarily for internal curatorial use to also being a digital source for publishing collection data online, we now need numerous types of overarching categories.

Pre-test

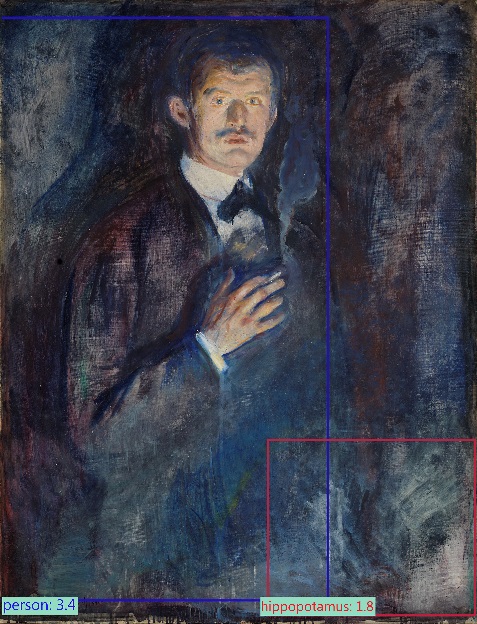

Before we started working on Iconclass, we tested our theories with algorithms specialized in, for example, faces, styles and animal motifs. The computer's findings sometimes yielded terms that were uncannily exact and at the same time useless (in the conventional sense). In our oil painting Self-portrait with cigarette (1895) by Edvard Munch (1863-1944), the algorithm easily recognized a human figure. Apparently, a hippopotamus is also lingering in the corner, cast in shadow and invisible to the human eye. Fig 1.

Illustration source: The National Museum of Art, Architecture and Design / Audun Mathias Øygard.

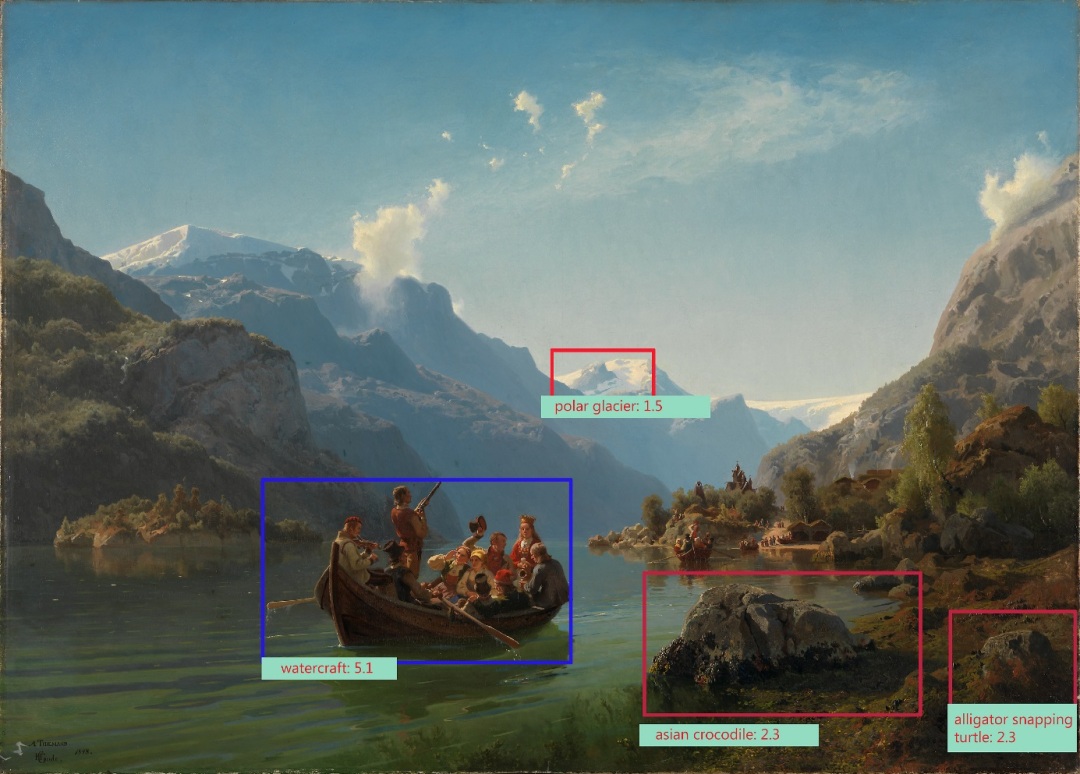

Another example of eccentric findings showed up in our iconic painting Bridal procession on the Hardangerfjord (1848) by Adolph Tidemand (1814-1876) and Hans Gude (1825-1903). Here the algorithm clearly identified the boat and the glacier in the background. However, in its context-free gaze, it also located exotic creatures never known to have Norway as their habitat, such as a crocodile and a turtle. Fig 2.

Over the last few years, we have repeatedly experienced that good metadata, both qualitative and quantitative, are fundamental to facilitating use of and providing access to the museum collections. However, we cannot register all catalogue documentation at once. At the same time, we want to cross-check both old and new information, so that it is reliable and correct when published. We therefore asked ourselves whether recent developments in artificial intelligence (AI) and machine learning could be applied to art history conventions. Computer algorithms are becoming increasingly capable of classifying images, in the past year reaching near-human skill within certain domains. Our thought was that, with help from the many new art collections online, this should be possible.

Iconclass test

We wanted to investigate whether there was any way to automatically uncover, translate and implement the missing Iconclass terms in our dataset. As it turned out, we found fewer easily accessible, large datasets of this kind than we expected. An exception was the useful research foundation in the Arkyves database[1]. Their enthusiastic specialists granted us access to search through larger Iconclass-structured datasets from collections similar to our own. However, the methodology we chose turned out to be less successful for the more specific Iconclass categories.

There are more than 28,000 specific categories in Iconclass, and for many of them we had few or even no examples. We therefore investigated training a classifier on higher-level categories in the Iconclass hierarchy, hoping that we could then use all examples from the subcategories as examples of the higher-level category. What we found when we examined the Iconclass hierarchy tree, however, was that visually similar objects reside in widely different high-level categories, and some even appear in several categories, depending on the context in which they occur. One example: while the class “Dog” (ID:34B11) resides in the subtree of the main category “Human being, Man in general”, the visually similar “Fox” (ID:25F23) resides in the subtree of the main category “Nature”. In addition, Iconclass has the class “Fox and hounds” (ID:43C1112311), which overlaps with both of these but resides in yet another main category, “Society, Civilization, Culture”, and concerns foxes and dogs in the context of fox-hunting. We concluded that the hierarchical structure was problematic for the algorithm, because the subcategories do not necessarily correspond to the main categories in a visually comprehensible and simple machine-readable manner. While it might be possible to train a classifier on such a hierarchy, it would likely require a much larger set of examples than we had available. After a thorough analysis of this and other issues related to costs and copyrights, we realized that we had to abandon further development of the algorithm on the more specific branches of Iconclass.
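The dog and fox example can be made concrete with a small sketch. It is an illustration only, not the code used in the project. It assumes plain Iconclass notations (no bracketed text or keys), where every prefix of a notation is one of its ancestors and the first character selects the main category. The function names `ancestors`, `roll_up` and `main_category` are hypothetical, and only the three main categories named in the text are listed.

```python
# Main categories named in the text, keyed by the first character of the notation.
# Other main categories exist but are left out of this sketch.
MAIN_CATEGORIES = {
    "2": "Nature",
    "3": "Human being, Man in general",
    "4": "Society, Civilization, Culture",
}


def ancestors(notation):
    """Return every prefix of a plain notation, from main category down to itself.

    Simplifying assumption: each additional character is one level deeper.
    """
    return [notation[:i] for i in range(1, len(notation) + 1)]


def roll_up(notation, depth):
    """Truncate a notation to `depth` characters to get a higher-level class."""
    return notation[:depth]


def main_category(notation):
    """Look up the main category from the first character of the notation."""
    return MAIN_CATEGORIES.get(notation[0], "(not listed in this sketch)")


# The three classes discussed in the text.
examples = {
    "Dog": "34B11",
    "Fox": "25F23",
    "Fox and hounds": "43C1112311",
}

for label, notation in examples.items():
    # Show where each class lands when rolled up to a three-character class.
    print(label, notation, roll_up(notation, 3), main_category(notation), sep=" | ")
```

Rolled up to three characters, “Fox” lands in 25F, “Dog” in 34B and “Fox and hounds” in 43C. A classifier trained on a higher-level class would therefore see foxes and dogs as examples of different classes, even though the images look alike.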

The analysis of the main categories, on the other hand, worked well. We therefore decided to further train the algorithm on the Rijksmuseum’s collection and its 300,000 available artworks. With the automatic generation of the most common subject keywords, we can now choose to add missing descriptive metadata to our catalogue. See examples of the categories that work (so far) in fig. 3 below.

| Iconclass ID | Iconographic subject (textual correlate) | Iconclass ID | Iconographic subject (textual correlate) |
| --- | --- | --- | --- |
| 0 | abstract, non-representational | 46C13141 | horse |
| 11G | angels | 25H | landscapes |
| 25F | animals | 25H2321 | ocean |
| 45H3 | battle | 41B1 | open fire (in general) |
| 25H133 | beach | 25G | plants |
| 7 | bible | 46C131 | riding a horse, ass, or mule, horseman |
| 25F3 | birds | 25H213 | river |
| 46C232 | boat, canoe, etc. | 46C24 | sailing-ship/boat |
| 43B141 | botanizing | 47I213 | sheep |
| 47I211 | bovine cattle | 43C245 | skis (winter sports) |
| 41A12 | castle | 26D1 | snow |
| 31D112 | child | 43C | sports, games and physical performances |
| 43C7 | children’s games and plays | 31A | the nude human figure |
| 11D | christ | 11F | the virgin mary |
| 11Q712 | church (exterior) | 48C85 | theatre, theatrical performance |
| 25I | city-view | 25G3 | trees |
| 25I3 | farm or house in landscape | 41E | still life of miscellaneous objects |
| 25H15 | forest, wood | | |
| 25H1111 | glacier | | |
| 61B | historical persons | | |

As a last step, we mapped the operational Iconclass motif types against the National Museum’s own subject keyword lists, as well as against national lists from the Norwegian Feltkatalogen[1]. Through the reuse of mechanically produced classifications, structured according to art historical iconography in Iconclass, we found an essential conventional utility in the algorithm’s basic information retrieval from the image files. With this result, we look forward to further studies within this field. By engaging with new possibilities like machine vision, we hope to contribute to building new understanding between the fields of machine learning and art history. Nevertheless, the core of our efforts is still to promote the important value of the never-ending documentation work carried out in museums every day.

The project is supported by Arts Council Norway, and was developed and realized in close collaboration with the data scientist Audun Mathias Øygard and Bengler.

For more results from the project Principal Components, see:

- The National Museum’s collection visualized with the help of algorithms: http://vy.nasjonalmuseet.no/?lang=eng#

- Short description of the project Principal Components: http://nasjonalmuseet.no/en/collections_and_research/collection_management/digital_collection_management/Project+Principal+Components.b7C_wJjU4L.ips#sthash.pJFIRPd9.dpuf

- For more technical descriptions from the project’s data scientists: http://bengler.no/principalcomponents

Author

Gro Benedikte Pedersen (1973): Holds a Cand. philol. degree in art history from the University of Oslo (2002). Works as a professional coordinator for Digital Collection Management at the National Museum of Art, Architecture and Design in Norway.

[1] The lists A.2.5 Motivtype B and A.2.5 Motivtype F in: Feltkatalog for kunst- og kulturhistoriske museer, Norsk Museumsutvikling (NMU), 2002. http://issuu.com/norsk_kulturrad/docs/feltkatalog?mode=window&viewMode=doublePage

Thank you, please comment in English on this forum. This concerns a link to a conference in Sweden, where this project was presented.
